Aerial Interaction with Tactile Sensing

While the use of autonomous Uncrewed Aerial Vehicles (UAVs) has grown rapidly, most applications focus only on passive visual tasks. Aerial interaction aims to execute tasks involving physical interaction, which offers a way to assist humans in high-risk, high-altitude operations, thereby reducing cost, time, and potential hazards. The coupled dynamics between the aerial vehicle and the manipulator, however, pose challenges for precise control. Previous research has typically employed either position control, which often fails to meet mission accuracy requirements, or force control using expensive, heavy, and cumbersome force/torque (F/T) sensors that also lack local semantic information. In contrast, tactile sensors are both cost-effective and lightweight, and they can sense contact information, including force distribution, as well as recognize local textures. Existing work on tactile sensing mainly focuses on tabletop manipulation tasks within a quasi-static process. In this paper, we pioneer the use of vision-based tactile sensors on a fully actuated UAV to improve the accuracy of more dynamic aerial manipulation tasks. We introduce a pipeline that uses tactile feedback for real-time force tracking via a hybrid motion-force controller, along with a method for wall texture detection during aerial interactions. Our experiments demonstrate that our system can effectively replace or complement traditional F/T sensors, improving flight performance by approximately 16% in position tracking error when using the fused force estimate compared to relying on a single sensor. Our tactile sensor achieves 93.4% accuracy in real-time texture recognition and 100% accuracy post-contact. To the best of our knowledge, this is the first work to incorporate a vision-based tactile sensor into aerial interaction tasks.

Approach Overview

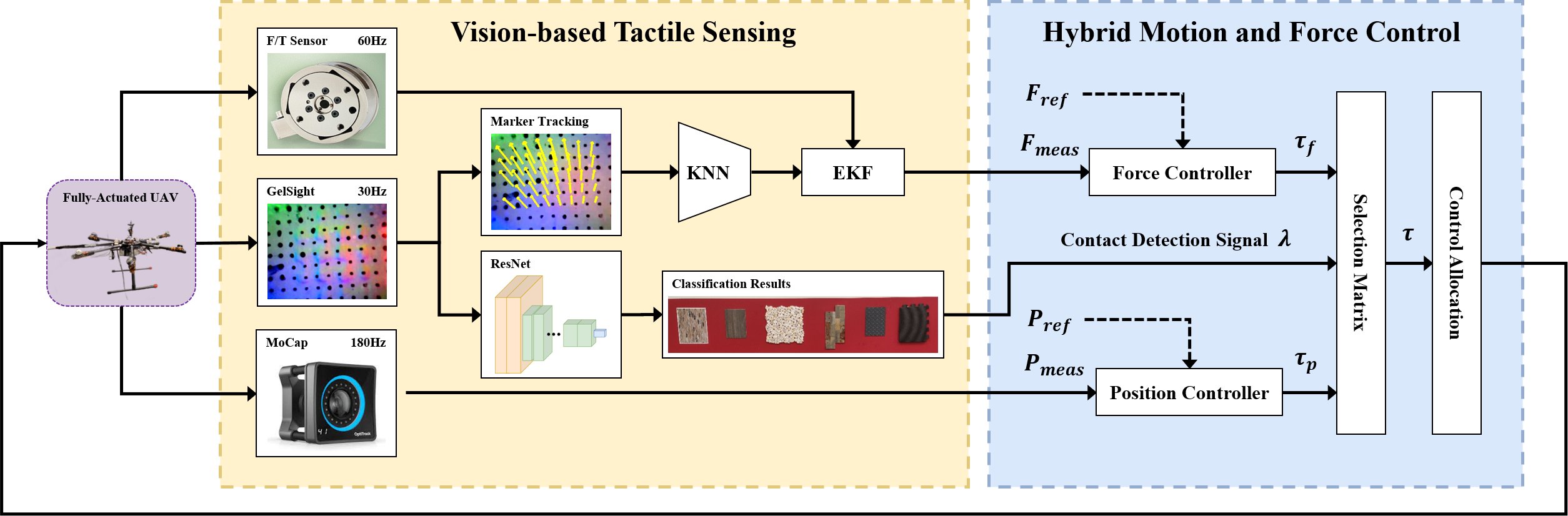

We developed a pipeline that combines the GelSight vision-based tactile sensor with a fully actuated UAV. The tactile sensor estimates the contact force and recognizes the wall texture during flight. The tactile force estimate and the indirect measurement from the base F/T sensor are then fused with a Kalman filter to estimate the external contact force, which the hybrid motion-force controller uses for force tracking.
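The fusion step can be illustrated with a minimal scalar Kalman filter. This is only a sketch under stated assumptions, not the filter used in the paper: it assumes a one-dimensional contact force along the wall normal, a random-walk process model, and independent measurement noise for the two sensors. The class name `ScalarForceKalman` and all noise variances are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarForceKalman:
    """Hypothetical 1-D Kalman filter for the contact force along the wall normal."""
    x: float = 0.0   # force estimate [N]
    p: float = 1.0   # variance of the estimate
    q: float = 0.01  # process noise variance (assumed random-walk force model)

    def predict(self) -> None:
        # Random-walk model: the force is assumed constant, uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Standard scalar measurement update with measurement variance r.
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k

    def step(self, z_tactile: float, r_tactile: float,
             z_ft: float, r_ft: float) -> float:
        # One filter cycle: predict, then fold in both sensors sequentially.
        self.predict()
        self.update(z_tactile, r_tactile)
        self.update(z_ft, r_ft)
        return self.x


# Usage with made-up readings: the lower-variance sensor pulls the estimate harder.
kf = ScalarForceKalman(x=0.0, p=1e6, q=0.0)
fused = kf.step(z_tactile=4.0, r_tactile=0.1, z_ft=6.0, r_ft=0.9)
```

Sequential updates are equivalent to a joint update when the two measurement noises are independent, which keeps the sketch free of matrix algebra.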

Simulator

We developed a simulator of our hexrotor model in Gazebo. We ported Taxim, an example-based simulation model for GelSight tactile sensors, to Gazebo and integrated it with the hexrotor simulator. The tactile simulation consists of two parts, matching what the GelSight provides: contact image simulation and marker motion simulation (illustrated as white arrows in the video). For the contact image simulation, we obtain the sensor position and the contacted surface position to calculate the contact depth. The total contact force is then read from the Gazebo physics engine (here we use ODE) to adjust the gel pad deformation depth. The contacted object model, the GelSight's embedded camera, and the LEDs are imported into Pyrender to render the contact depth image. The image is then blurred with Gaussian noise to mimic real gel deformation and output as the simulated contact tactile image. For the marker motion simulation, we read the contact force and the contact point from the Gazebo physics engine and use the Taxim example-based method to calculate the force distribution over the gel pad surface. The marker motions are then sampled based on the markers' initial positions.
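The contact-depth part of this description can be sketched roughly as follows. This is a simplified illustration, not the Taxim or Gazebo implementation: it assumes the object relief is given as a height map over the gel pad, a linear spring model (hypothetical `stiffness`) for turning the simulated force into an indentation, and a separable Gaussian smoothing step standing in for the blurring. Rendering with Pyrender is omitted.

```python
import numpy as np


def gaussian_kernel(sigma: float, radius: int) -> np.ndarray:
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(img: np.ndarray, sigma: float = 1.5) -> np.ndarray:
    """Separable Gaussian smoothing with edge padding (output has the input shape)."""
    radius = int(3 * sigma)
    k = gaussian_kernel(sigma, radius)
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def contact_depth(surface_height: np.ndarray, gap: float, force: float,
                  stiffness: float = 2000.0, max_depth: float = 1.5e-3) -> np.ndarray:
    """Gel indentation map [m].

    surface_height: object relief toward the sensor [m].
    gap: distance between the undeformed gel surface and the relief's zero plane [m].
    force: total contact force from the physics engine [N].
    stiffness, max_depth: assumed gel parameters (hypothetical values).
    """
    # Geometric overlap between the object and the undeformed gel.
    penetration = np.clip(surface_height - gap, 0.0, None)
    peak = penetration.max()
    if peak <= 0.0 or force <= 0.0:
        return np.zeros_like(penetration)
    # Rescale so the deepest point matches the force-based indentation.
    target = min(force / stiffness, max_depth)
    return blur(penetration * (target / peak))


# Usage: a 1 mm square bump pressed into the gel with 2 N.
bump = np.zeros((32, 32))
bump[12:20, 12:20] = 1e-3
depth = contact_depth(bump, gap=0.5e-3, force=2.0)
```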

In the future, the simulation will help develop more aggressive control methods for our system.

Experiment

Hybrid motion-force controller

We compare the performance of our hybrid force-motion controller across three scenarios: (1) relying solely on F/T sensor readings, (2) using only vision-based tactile sensor measurements, and (3) employing sensor fusion that combines both types of measurements. The vehicle is directed to approach a wall and sustain a contact force of 5 N upon making contact.
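As a rough illustration of what a hybrid motion-force law computes in this wall-contact setup, the sketch below splits the command into a force-controlled direction (the wall normal) and motion-controlled directions (the wall plane). It is a generic textbook-style formulation under assumed gains and sign conventions, not the controller from the paper; the function name and all gain values are hypothetical.

```python
import numpy as np


def hybrid_force_command(normal, pos_err, vel_err, f_des, f_meas, f_err_int,
                         kp=6.0, kd=3.0, kf=0.8, ki=0.4):
    """Commanded force [N] for a fully actuated vehicle in contact with a wall.

    normal: vector pointing from the vehicle into the wall.
    pos_err, vel_err: desired minus actual position and velocity.
    f_des, f_meas: desired and estimated push force along the normal [N].
    f_err_int: integral of the force error, accumulated by the caller.
    """
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    force_proj = np.outer(n, n)            # selects the force-controlled axis
    motion_proj = np.eye(3) - force_proj   # selects the wall-plane axes

    # PD motion control, restricted to directions tangent to the wall.
    motion_cmd = motion_proj @ (kp * np.asarray(pos_err, dtype=float)
                                + kd * np.asarray(vel_err, dtype=float))
    # Feedforward plus PI force control along the wall normal.
    f_err = f_des - f_meas
    force_cmd = (f_des + kf * f_err + ki * f_err_int) * n
    return motion_cmd + force_cmd


# Usage: hold 5 N against a wall along +x while correcting lateral drift.
cmd = hybrid_force_command(normal=[1.0, 0.0, 0.0],
                           pos_err=[0.5, 0.1, -0.2], vel_err=[0.0, 0.0, 0.0],
                           f_des=5.0, f_meas=5.0, f_err_int=0.0)
```

Note that the position error along the normal is discarded by the projection, so force regulation alone governs the contact direction.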

We use both the force tracking error and the position error to quantify controller performance. Notably, the scenarios relying solely on either the F/T sensor or the vision-based tactile sensor showed similar ranges of error variance, while using the fused force estimate led to about a 16% improvement in key performance indicators such as RMSE and standard deviation, compared to relying only on the F/T sensor. In summary, the results strongly indicate that the vision-based tactile sensor can effectively serve as a replacement for, or complement to, traditional F/T sensors, yielding comparable or enhanced flight performance.
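For reference, the indicators named above can be computed from a logged error signal as in the sketch below. The helper names are hypothetical, the standard deviation is the population form, and the numbers in the usage lines are placeholders rather than experimental data.

```python
import math


def rmse(errors):
    """Root-mean-square of a sequence of tracking errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def std(errors):
    """Population standard deviation of a sequence of tracking errors."""
    mean = sum(errors) / len(errors)
    return math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))


def relative_improvement(baseline, candidate):
    """Percentage reduction of an error metric relative to a baseline."""
    return 100.0 * (baseline - candidate) / baseline


# Usage with placeholder values (not measurements from the experiments).
baseline_rmse = rmse([2.0, -2.0, 2.0, -2.0])
candidate_rmse = rmse([1.5, -1.5, 1.5, -1.5])
gain = relative_improvement(baseline_rmse, candidate_rmse)
```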

Online texture detection

We evaluate the system's ability to identify wall textures during flight and contact. We focus on the following textures: 1) wood grain, 2) marble mosaic, 3) quartz stone, 4) diamond vinyl, and 5) foam mat. Additionally, we include a sixth texture: a printed imitation of a brick pattern on flat paper. Our method achieves 93.4% accuracy in real-time texture recognition. Importantly, the predictions stabilize and self-correct over time, ultimately reaching a post-contact accuracy of 100% for all textures. These results demonstrate that the vision-based tactile sensor can effectively identify 3D textures during aerial contact, suggesting its potential utility in defect detection tasks, such as identifying wing cracks. The tactile sensor also works well when the external lighting conditions change, a situation that is challenging for conventional vision.
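The text does not say how the predictions stabilize over time. One simple way to obtain that behavior, shown here purely as an assumed illustration, is a sliding-window majority vote over per-frame classifier outputs. The class `VoteSmoother`, the window length, and the label strings are hypothetical.

```python
from collections import Counter, deque


class VoteSmoother:
    """Sliding-window majority vote over per-frame texture labels (assumed scheme)."""

    def __init__(self, window: int = 5):
        self.history = deque(maxlen=window)

    def update(self, label: str) -> str:
        # Store the newest per-frame prediction and return the most common
        # label in the window; ties go to the label seen earliest in the window.
        self.history.append(label)
        return Counter(self.history).most_common(1)[0][0]


# Usage: early misclassifications are outvoted once contact is established.
frames = ["marble mosaic", "quartz stone", "marble mosaic"] + ["quartz stone"] * 10
smoother = VoteSmoother(window=5)
outputs = [smoother.update(label) for label in frames]
```

A longer window gives more stable output at the cost of slower reaction to a genuine texture change.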

Publication

@inproceedings{guo2024aerial,
  title={Aerial interaction with tactile sensing},
  author={Guo, Xiaofeng and He, Guanqi and Mousaei, Mohammadreza and Geng, Junyi and Shi, Guanya and Scherer, Sebastian},
  booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},
  pages={1576--1582},
  year={2024},
  organization={IEEE}
}